The ultimate LangChain series: Project structure

Learn how the basic structure of a LangChain project looks


In the previous guide, we learned how to properly set up a Python environment for developing with LangChain. Check it out if you landed directly on this guide, since it prepares the environment and installs the necessary packages.


Now it's time to go over the general structure so you can have a good foundation to build any language model-powered application. This guide is again short and to the point.

Basic LangChain project structure

As we saw during the environment setup, LangChain has many modules available, and it can be confusing to work out which ones to use and in what order.

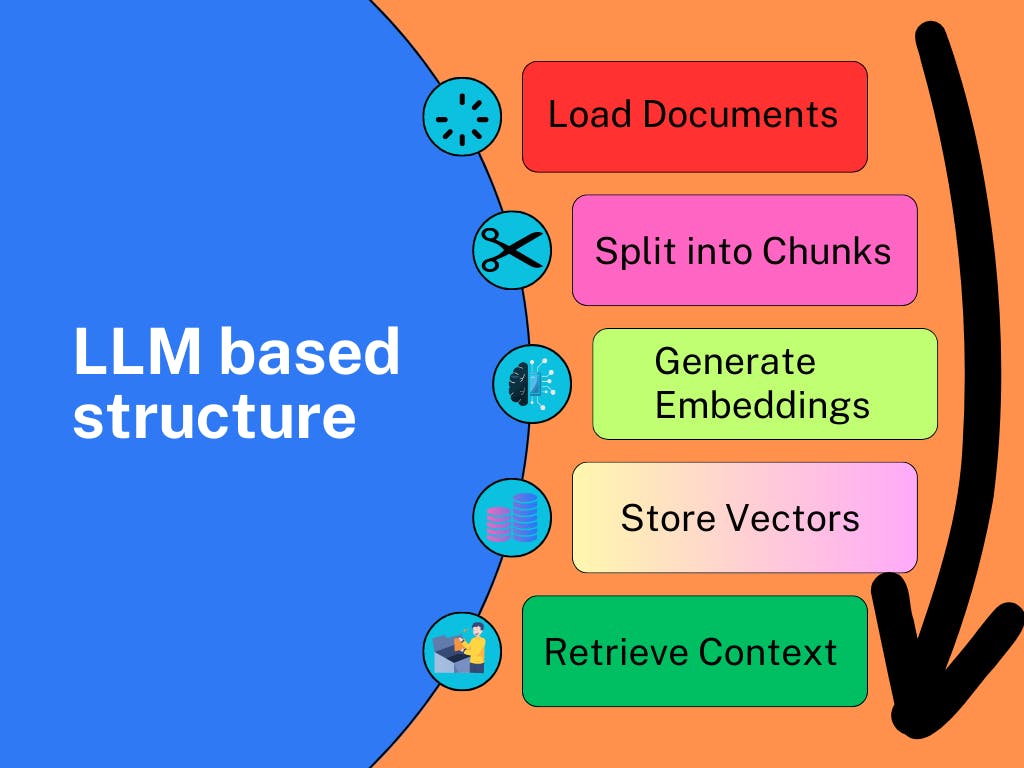

The diagram shows the general structure of a typical application, from loading documents to retrieving context.

We can easily take care of each step in a few lines of code thanks to LangChain, as it does a great job abstracting complex tasks.

Load Documents: This is the initial step, where the raw data (the documents) is loaded into the system. These documents can come in various formats, such as text files, PDFs, HTML files, and so on. LangChain offers a vast suite of document loaders, including loaders for images.

Check the document loaders available in the LangChain docs.
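To make the loading step concrete, here is a minimal plain-Python sketch of what a loader does: read files and wrap each one in an object that holds the text and where it came from. It is a stand-in, not LangChain's loader API. The `load_text_files` helper and the `*.txt` pattern are assumptions made for illustration, while the `page_content` and `metadata` fields mirror the shape LangChain documents use.

```python
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class Document:
    """Stand-in for a loaded document: the text plus where it came from."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def load_text_files(directory: str, pattern: str = "*.txt") -> list[Document]:
    """Read every file under `directory` matching `pattern` into a Document.

    Hypothetical helper for illustration; real loaders also handle PDFs,
    HTML, and other formats.
    """
    docs = []
    for path in sorted(Path(directory).rglob(pattern)):
        text = path.read_text(encoding="utf-8")
        docs.append(Document(page_content=text, metadata={"source": str(path)}))
    return docs
```

Keeping the source path in `metadata` is what later lets an application tell the user which file an answer came from.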

Split into Chunks: Once the documents are loaded, they are split into smaller, digestible chunks, because processing small pieces of text is more efficient than processing large documents. The chunk size depends on the requirements of the model and the kind of data. We can choose between many text splitters, such as the code text splitter for source code, the Recursive Character splitter for general data (like a mix of text and code), the NLTK splitter for natural language, and more.

Find all the text splitters available in the LangChain docs.
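The idea behind chunking can be sketched in a few lines of plain Python. This is a deliberately naive fixed-size splitter with overlap, not one of LangChain's splitters, which also try to break on natural boundaries such as paragraphs. The function name `split_text` and its default values are assumptions chosen for illustration.

```python
def split_text(text: str, chunk_size: int = 200, chunk_overlap: int = 20) -> list[str]:
    """Cut `text` into chunks of at most `chunk_size` characters.

    Consecutive chunks share `chunk_overlap` characters so that a sentence
    cut at a boundary still appears whole in one of the two chunks.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks


print(split_text("abcdefghij", chunk_size=4, chunk_overlap=1))
# ['abcd', 'defg', 'ghij']
```

The overlap is a trade-off: a larger value loses less context at chunk boundaries but stores more duplicated text.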

Generate Embeddings: After the documents are split into chunks, the next step is to convert these chunks into a format that the model can work with. This is done by generating an embedding vector for each chunk. An embedding vector is a numerical representation of the chunk's content. These vectors capture the semantic meaning of the chunks, so chunks with related content end up with similar vectors. There are many embedding models available, depending on your needs.
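To show what "text in, vector out" looks like, here is a toy embedding function in plain Python. It is only a sketch: it hashes words into a fixed-size vector, so it captures shared vocabulary rather than real semantic meaning, which is what learned embedding models provide. The name `toy_embed` and the dimension of 64 are assumptions for illustration.

```python
import hashlib
import math
import re


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Map `text` to a unit-length vector with `dim` components.

    Each word is hashed to one position in the vector and counted there.
    This is a toy stand-in for a real embedding model.
    """
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        index = int.from_bytes(digest[:4], "big") % dim
        vec[index] += 1.0

    # Normalize so that vectors of long and short texts are comparable.
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec
```

Whatever model is used, the important property is the same: every chunk, regardless of its length, becomes a vector of the same size that can be compared with other vectors.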

Store Vectors: Once the embedding vectors are generated, they are stored in a database or vector store. This is a specialized type of database designed to handle high-dimensional vector data. Storing the vectors in this way allows for efficient retrieval and comparison of vectors, which is essential for the next step.

See the vector stores available in the LangChain docs.
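A vector store can be pictured as a list of vectors with their texts, plus a way to find the closest ones. The sketch below is a tiny in-memory version in plain Python that compares vectors by cosine similarity. The class name `TinyVectorStore` and its methods are hypothetical, and it scans every vector on each search, whereas real vector stores use indexes to stay fast on large collections.

```python
import math
from dataclasses import dataclass, field


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between `a` and `b` (0.0 for zero vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


@dataclass
class TinyVectorStore:
    """Hypothetical in-memory store: keeps each vector next to its text."""
    vectors: list = field(default_factory=list)
    texts: list = field(default_factory=list)

    def add(self, vector: list[float], text: str) -> None:
        self.vectors.append(list(vector))
        self.texts.append(text)

    def search(self, query_vector: list[float], k: int = 3) -> list[tuple[str, float]]:
        """Return the `k` stored texts most similar to `query_vector`, best first."""
        scored = [
            (text, cosine_similarity(query_vector, vector))
            for vector, text in zip(self.vectors, self.texts)
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]
```

For example, after adding `[1.0, 0.0]` as "cats", `[0.0, 1.0]` as "dogs", and `[0.9, 0.1]` as "kittens", searching with `[1.0, 0.0]` and `k=2` returns "cats" first and "kittens" second.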

Retrieve Context: When a user query is received, the system needs to determine which chunks are relevant to the query. This is done by retrieving the appropriate context from the database. The system compares the query to the stored vectors to find the chunks that are most similar to the query. These chunks are then used to generate the model's response.
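One common way to use the retrieved chunks is to paste them into the prompt sent to the model, ahead of the user's question. The sketch below assumes the chunks are already ranked from most to least similar. The `build_prompt` helper, the character budget, and the prompt wording are all assumptions made for illustration, not something LangChain prescribes.

```python
def build_prompt(question: str, ranked_chunks: list[str], max_context_chars: int = 1000) -> str:
    """Pack the best-ranked chunks into a prompt until the budget is used up.

    `ranked_chunks` must be ordered from most to least relevant, so the
    chunks dropped for lack of space are always the least relevant ones.
    """
    selected = []
    used = 0
    for chunk in ranked_chunks:
        if used + len(chunk) > max_context_chars:
            break
        selected.append(chunk)
        used += len(chunk)

    context = "\n\n".join(selected)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )
```

The budget exists because a model can only read a limited amount of text per request, which is also why the documents were split into chunks in the first place.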

This overview provides a fundamental understanding of the basic mechanics at play. While the explanation is simplified, LangChain performs complex operations behind the scenes, enabling you to easily build powerful applications.

The applications we'll develop throughout this series are deceptively simple in terms of code and structure. However, they offer significant capabilities, demonstrating that simplicity in design does not limit functionality or potential. This approach allows you to grasp the core concepts while also appreciating the power and versatility of LangChain.

In the next article, we'll explore how to use document loaders and text splitters, which is the first important part of your language model-based application.

We'll break down every step, and at the end, we'll build an application that allows you to 'chat' with all of the articles in my blog! And maybe build some more apps for different use cases 🤖.